Hi, my name is

Soufiane Hayou.

I use Maths to understand AI.

About Me

I am currently an Assistant Professor in the Department of Applied Mathematics and Statistics at Johns Hopkins University. I am also a faculty member at the Data Science and AI Institute, where we investigate foundations and applications of AI. Previously, I spent some time as a researcher at the Simons Institute (UC Berkeley) and was a member of the Collaboration on Foundations of Deep Learning. Before that, I was a faculty member at the Department of Mathematics at the National University of Singapore. I obtained my PhD in Statistics and Machine Learning at the University of Oxford. Before starting my PhD, I graduated from Ecole Polytechnique in France with a major in Applied Mathematics (MSc and Engineering Diploma). I also have an MSc in Quantitative Finance from Paris VI University (DEA El Karoui).

I like to scale things up! My main research focuses on efficient learning at scale, notably efficient pretraining and finetuning of LLMs. More generally, I am interested in the learning and functioning dynamics of large-scale systems. I am also broadly interested in the following topics:

- Mathematics of Large Scale Systems

- Data: Synthetic Data Generation, Data Pruning, Data Augmentation

- Trustworthy AI in the LLM era: Privacy, Hallucination, etc.

- AI for X, with X in {Math, Life Sciences, Education}

🎓 For prospective students:

You can check this summary of my research interests to learn more about my work in AI.

Publications and Preprints

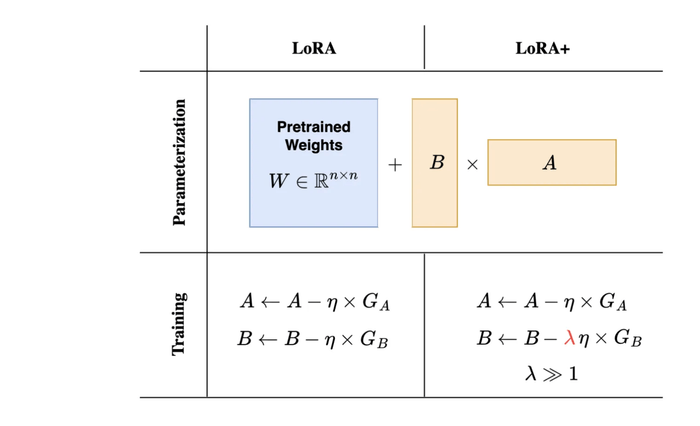

LoRA+: Efficient Low Rank Adaptation of Large Models

We show that conventional Low Rank Adaptation (LoRA) leads to suboptimal finetuning of models with large width (embedding dimension). This is because the adapter matrices A and B in LoRA are updated with the same learning rate. Using scaling arguments for large-width networks, we demonstrate that using the same learning rate for A and B does not allow efficient feature learning. We then show that this suboptimality of LoRA can be corrected simply by setting different learning rates for the LoRA adapter matrices A and B with a well-chosen ratio. We call this proposed algorithm LoRA+. In our extensive experiments, LoRA+ improves performance (1-2% improvements) and finetuning speed (up to ~2x speedup), at the same computational cost as LoRA.

LoRA+: Efficient Low Rank Adaptation of Large Models. ICML 2024. Soufiane Hayou, Nikhil Ghosh, Bin Yu. Paper, Blog.

- Fine-Tuning, Large Language Models, Low-Rank Adaptation
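The core idea of LoRA+ (separate learning rates for A and B, tied by a fixed ratio) can be illustrated with a minimal sketch. The helper name `lora_plus_param_groups`, the `lora_A` / `lora_B` naming convention, and the example ratio of 16 are assumptions made for illustration, not the released implementation. The returned list follows the per-parameter-group format (dicts with `params` and `lr`) that optimizers such as `torch.optim.AdamW` accept.

```python
def lora_plus_param_groups(named_params, lr_a, lr_ratio):
    """Split LoRA adapter parameters into two groups with different learning rates.

    named_params: iterable of (name, parameter) pairs.
    lr_a:         learning rate used for the A matrices.
    lr_ratio:     hypothetical ratio lr_B / lr_A (larger than 1 in LoRA+).
    """
    group_a, group_b = [], []
    for name, param in named_params:
        # Assumption: adapter matrices are identifiable by "lora_A" / "lora_B"
        # in their names. Other parameters are treated as frozen and skipped.
        if "lora_B" in name:
            group_b.append(param)
        elif "lora_A" in name:
            group_a.append(param)
    return [
        {"params": group_a, "lr": lr_a},
        {"params": group_b, "lr": lr_a * lr_ratio},
    ]


# Usage with placeholder objects standing in for real tensors.
example_params = [
    ("layer0.lora_A.weight", "A0"),
    ("layer0.lora_B.weight", "B0"),
    ("layer0.base.weight", "W0"),
]
groups = lora_plus_param_groups(example_params, lr_a=1e-4, lr_ratio=16.0)
```

With standard LoRA both groups would share one learning rate, which corresponds to `lr_ratio=1.0` in this sketch.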

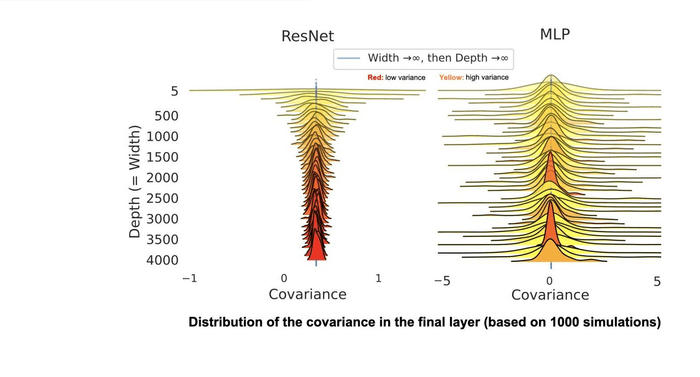

Width and Depth Limits Commute in Residual Networks

We show that taking the width and depth to infinity in a deep neural network with skip connections, when branches are scaled by 1/sqrt(depth) (the only nontrivial scaling), results in the same covariance structure no matter how that limit is taken. This explains why the standard infinite-width-then-depth approach provides practical insights even for networks with depth of the same order as width. We also demonstrate that the pre-activations, in this case, have Gaussian distributions, which has direct applications in Bayesian deep learning. We conduct extensive simulations that show an excellent match with our theoretical findings.

Width and Depth Limits Commute in Residual Networks. ICML 2023. Soufiane Hayou, Greg Yang. Link

- Residual Neural Networks, Infinite Width, Infinite Depth
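To make the 1/sqrt(depth) branch scaling concrete, here is a small pure-Python sketch of a randomly initialized residual network. The ReLU activation, the N(0, 1/width) weight initialization, and the function names are assumptions chosen for illustration and are not taken from the paper. It compares the output norm with and without the scaling.

```python
import math
import random


def resnet_forward(x, depth, branch_scale, seed=0):
    """Forward pass x <- x + branch_scale * W @ relu(x) with fresh Gaussian W per layer."""
    rng = random.Random(seed)
    width = len(x)
    std = 1.0 / math.sqrt(width)  # assumption: weights drawn from N(0, 1/width)
    for _ in range(depth):
        activated = [max(v, 0.0) for v in x]
        # Each output coordinate is one row of a random matrix times relu(x).
        branch = [
            sum(rng.gauss(0.0, std) * a for a in activated)
            for _ in range(width)
        ]
        x = [v + branch_scale * b for v, b in zip(x, branch)]
    return x


def squared_norm(x):
    return sum(v * v for v in x)


depth, width = 16, 32
x0 = [1.0] * width
scaled = resnet_forward(x0, depth, branch_scale=1.0 / math.sqrt(depth))
unscaled = resnet_forward(x0, depth, branch_scale=1.0)
print(squared_norm(scaled), squared_norm(unscaled))
```

In this sketch the unscaled network's output norm grows quickly with depth, while the scaled one stays of the same order as the input norm.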

Feature Learning and Signal Propagation in DNNs

During the training of deep neural networks, some layers align much more with data than other layers (where the alignment is defined as the Euclidean product of the tangent features matrix and the data labels matrix). The curve of the alignment as a function of layer index (generally) exhibits an ascent-descent pattern where the maximum is reached for some hidden layer. In this work, we provide the first explanation for this phenomenon. We introduce the Equilibrium Hypothesis, which connects this alignment pattern to signal propagation in deep neural networks. Our experiments demonstrate an excellent match with the theoretical predictions.

Feature Learning and Signal Propagation in Deep Neural Networks. ICML 2022. Yizhang Lou, Chris Mingard, Yoonsoo Nam, Soufiane Hayou. Link

- Feature Learning in Deep Neural Networks, Signal Propagation

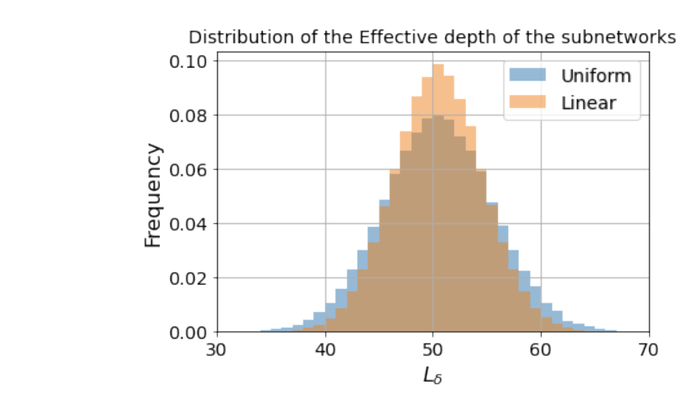

ResNets and Stochastic Depth

Regularization plays a major role in modern deep learning. From classic techniques such as L1 and L2 penalties to noise-based methods such as Dropout, regularization often yields better generalization properties by avoiding overfitting. Recently, Stochastic Depth (SD) has emerged as an alternative regularization technique for residual neural networks (ResNets) and has proven to boost the performance of ResNets on many tasks. Despite the recent success of SD, little is known about this technique from a theoretical perspective. This paper provides a hybrid analysis combining perturbation analysis and signal propagation to shed light on different regularization effects of SD. Our analysis allows us to derive principled guidelines for the choice of the survival rates used when training with SD.

Regularization in ResNet with Stochastic Depth. NeurIPS 2021. Soufiane Hayou, Fadhel Ayed. Link

- Residual Neural Networks, Regularization
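For readers unfamiliar with Stochastic Depth, the mechanism can be sketched as follows: during training each residual block is kept with its survival rate, and at inference each block's output is multiplied by that rate. The function names are hypothetical, and the linear-decay schedule shown is the rule from the original Stochastic Depth paper (Huang et al., 2016), included only as a common baseline. It is not presented as the guideline derived in the paper above.

```python
import random


def stochastic_depth_forward(x, blocks, survival_probs, training, rng=None):
    """Apply residual blocks, randomly skipping each one during training."""
    rng = rng or random.Random(0)
    for block, p in zip(blocks, survival_probs):
        if training:
            # Keep the residual branch with probability p, otherwise skip it.
            if rng.random() < p:
                x = x + block(x)
        else:
            # At inference, scale the branch by its survival rate.
            x = x + p * block(x)
    return x


def linear_decay_survival(depth, final_prob):
    """Survival rates decreasing linearly from near 1 to final_prob at the last block."""
    return [1.0 - (l / depth) * (1.0 - final_prob) for l in range(1, depth + 1)]


# Usage with toy scalar "blocks" standing in for real residual branches.
blocks = [lambda v: 0.1 * v + 1.0 for _ in range(4)]
probs = linear_decay_survival(depth=4, final_prob=0.5)
train_out = stochastic_depth_forward(0.0, blocks, probs, training=True)
eval_out = stochastic_depth_forward(0.0, blocks, probs, training=False)
```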

Stable ResNet

Deep ResNet architectures have achieved state-of-the-art performance on many tasks. While they solve the problem of vanishing gradients, they might suffer from exploding gradients as the depth becomes large (Yang et al. 2017). Moreover, recent results have shown that ResNets might lose expressivity as the depth goes to infinity (Yang et al. 2017, Hayou et al. 2019). To resolve these issues, we introduce a new class of ResNet architectures, called Stable ResNet, that have the property of stabilizing the gradient while ensuring expressivity in the infinite depth limit.

Stable ResNet. AISTATS 2021 (Oral presentation). Soufiane Hayou, Eugenio Clerico, Bobby He, George Deligiannidis, Arnaud Doucet, Judith Rousseau. Link

- Residual Neural Network

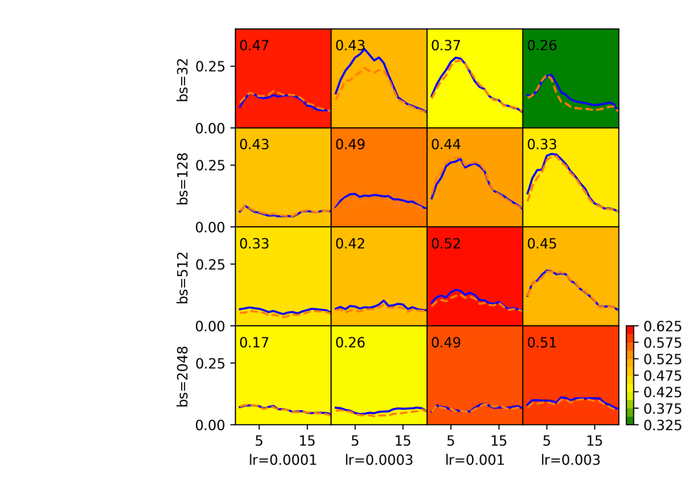

Model Compression at Initialization

Overparameterized Neural Networks (NN) display state-of-the-art performance. However, there is a growing need for smaller, energy-efficient neural networks to be able to use machine learning applications on devices with limited computational resources. A popular approach consists of using pruning techniques. While these techniques have traditionally focused on pruning pre-trained NN, recent research has shown promising results when pruning at initialization. In this paper, we provide a comprehensive theoretical analysis of pruning at initialization and training of sparse architectures. This allows us to propose novel principled approaches, which we validate experimentally on a variety of NN architectures.

Robust Pruning at Initialization. ICLR 2021. Soufiane Hayou, Jean-Francois Ton, Arnaud Doucet, Yee Whye Teh. Link

- Neural Network Pruning

Initialization and Activation Function

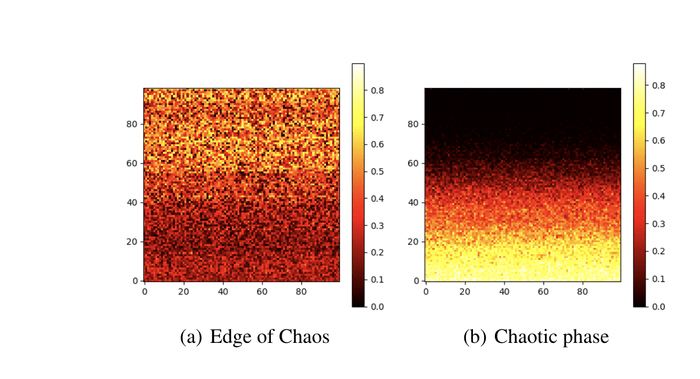

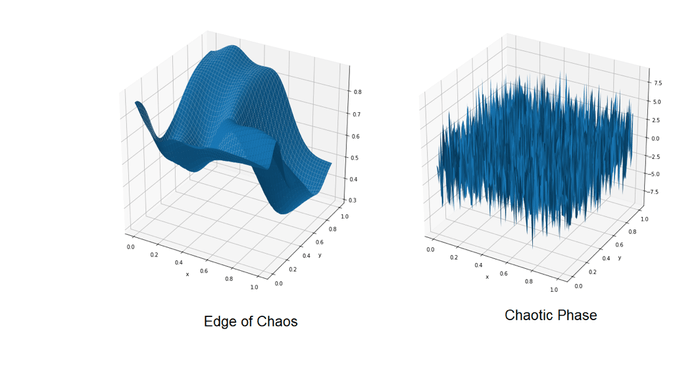

The figures on the side represent the output of a randomly initialized neural network of depth 100 and width 100 with Tanh activation function and input space [0,1]x[0,1]. When the network is initialized in the Chaotic phase, the output function is discontinuous almost everywhere (in the limit of infinite width). In this case, two inputs that are close (in Euclidean distance) have very different output values. On the other hand, an initialization known as the Edge of Chaos yields smooth, regular output functions. In this paper, we give a comprehensive analysis of the Edge of Chaos initialization and show the critical role of the smoothness of the activation function in deep neural network training.

On the Impact of the Activation function on Deep Neural Networks Training. ICML 2019. Soufiane Hayou, Arnaud Doucet, Judith Rousseau. Link

- Deep Neural Network Training
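The distinction between phases can be checked numerically with a small sketch based on the standard mean-field recursion from the signal propagation literature. This is an illustration under stated assumptions and not the paper's code: weights are assumed to have variance sigma_w^2 / width, biases variance sigma_b^2, and the function names and quadrature settings are hypothetical. The quantity computed is chi = sigma_w^2 * E[tanh'(sqrt(q) Z)^2] at the variance fixed point q. In this framework, chi < 1 corresponds to the ordered phase, chi > 1 to the chaotic phase, and chi = 1 to the Edge of Chaos.

```python
import math


def gaussian_expectation(f, num_points=2001, limit=8.0):
    """Approximate E[f(Z)] for Z ~ N(0, 1) with the trapezoidal rule on [-limit, limit]."""
    step = 2.0 * limit / (num_points - 1)
    total = 0.0
    for i in range(num_points):
        z = -limit + i * step
        weight = 0.5 if i in (0, num_points - 1) else 1.0
        total += weight * f(z) * math.exp(-0.5 * z * z)
    return total * step / math.sqrt(2.0 * math.pi)


def chi_tanh(sigma_w, sigma_b, num_iters=200):
    """Iterate the variance map for tanh, then return chi at the resulting variance."""
    q = 1.0
    for _ in range(num_iters):
        s = math.sqrt(q)
        q = sigma_b ** 2 + sigma_w ** 2 * gaussian_expectation(
            lambda z: math.tanh(s * z) ** 2
        )
    s = math.sqrt(q)
    # The derivative of tanh is 1 - tanh^2.
    return sigma_w ** 2 * gaussian_expectation(
        lambda z: (1.0 - math.tanh(s * z) ** 2) ** 2
    )


print(chi_tanh(0.5, 0.0))  # below 1: ordered phase
print(chi_tanh(2.0, 0.0))  # above 1: chaotic phase
```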

Full list

Please check my resume for the most up-to-date list of publications and preprints.

♦ A Proof of Learning Rate Transfer under μP. AISTATS 2026 (Oral presentation). Soufiane Hayou. Link

♦ PLoP: Precise LoRA Placement for Efficient Finetuning of Large Models. ICLR 2026. Soufiane Hayou, Nikhil Ghosh, Bin Yu. Link

♦ Disentangling Rich Dynamics from Feature Learning: A Framework for Independent Measurements. ICLR 2026. Yoonsoo Nam, Nayara Fonseca, Seok Hyeong Lee, Chris Mingard, Niclas Goring, Ouns El Harzli, Abdurrahman Hadi Erturk, Soufiane Hayou, Ard A. Louis. Link

♦ Maximizing the Potential of Synthetic Data: Insights from Random Matrix Theory. ICLR 2025. Aymane El Firdoussi, Mohamed El Amine Seddik, Soufiane Hayou, Reda Alami, Ahmed Alzubaidi, Hakim Hacid. Link

♦ The Impact of Initialization on LoRA Finetuning Dynamics. NeurIPS 2024. Soufiane Hayou, Nikhil Ghosh, Bin Yu. Link

♦ LoRA+: Efficient Low Rank Adaptation of Large Models. ICML 2024. Soufiane Hayou, Nikhil Ghosh, Bin Yu. Paper, Blog

♦ Commutative Width and Depth Scaling in Deep Neural Networks. JMLR, 2024. Soufiane Hayou. Link

♦ How Bad is Training on Synthetic Data? A Statistical Analysis of Language Model Collapse. COLM 2024. Mohamed El Amine Seddik, Suei-Wen Chen, Soufiane Hayou, Pierre Youssef, Merouane Debbah. Link

♦ Tensor Programs VI: Feature Learning in Infinite Depth Neural Networks. ICLR 2024. Greg Yang, Dingli Yu, Chen Zhu, Soufiane Hayou. Link

♦ Leave-one-out Distinguishability in Machine Learning. ICLR 2024. Jiayuan Ye, Anastasia Borovykh, Soufiane Hayou, Reza Shokri. Link

♦ Width and Depth Limits Commute in Residual Networks. ICML 2023. Soufiane Hayou, Greg Yang. Link

♦ Data Pruning and Neural Scaling Laws: Fundamental Limitations of Score-based Algorithms. Transactions on Machine Learning Research (2023). Fadhel Ayed, Soufiane Hayou. Link

♦ On the infinite-depth limit of finite-width neural networks. Transactions on Machine Learning Research (2022). Soufiane Hayou. Link

♦ Feature Learning and Signal Propagation in Deep Neural Networks. ICML 2022. Yizhang Lou, Chris Mingard, Yoonsoo Nam, Soufiane Hayou. Link

♦ From Optimization Dynamics to Generalization Bounds via Lojasiewicz Gradient Inequality. Transactions on Machine Learning Research (2022). Fusheng Liu, Haizhao Yang, Soufiane Hayou, Qianxiao Li. Link

♦ The curse of (non) convexity: The case of an Optimization-Inspired Data Pruning algorithm. NeurIPS ICBINB Workshop 2022. Fadhel Ayed, Soufiane Hayou. Link

♦ Regularization in ResNet with Stochastic Depth. NeurIPS 2021. Soufiane Hayou, Fadhel Ayed. Link

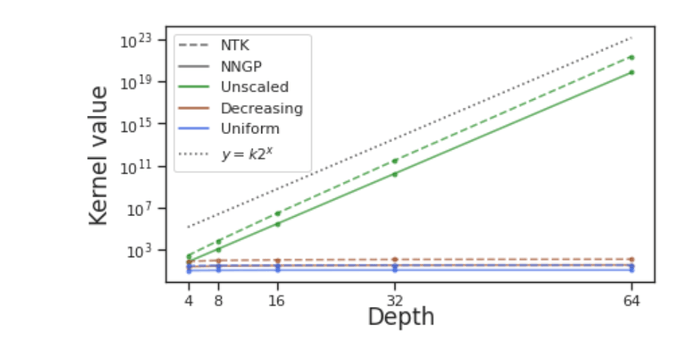

♦ The Curse of Depth in Kernel Regime. NeurIPS 2021 ICBINB Workshop (Spotlight). Proceedings of Machine Learning Research. Soufiane Hayou, Arnaud Doucet, Judith Rousseau. Link

♦ Probabilistic fine-tuning of pruning masks and PAC-Bayes self-bounded learning (2021, ArXiv). Soufiane Hayou, Bobby He, Gintare Karolina Dziugaite. Link

♦ Stochastic Pruning: Fine-Tuning, and PAC-Bayes bound optimization. NeurIPS 2021 Bayesian Deep Learning Workshop. Soufiane Hayou, Bobby He, Gintare Karolina Dziugaite. Link

♦ Robust Pruning at Initialization. ICLR 2021. Soufiane Hayou, Jean-Francois Ton, Arnaud Doucet, Yee Whye Teh. Link

♦ Stable ResNet. AISTATS 2021 (Oral presentation). Soufiane Hayou, Eugenio Clerico, Bobby He, George Deligiannidis, Arnaud Doucet, Judith Rousseau. Link

♦ On the Impact of the Activation function on Deep Neural Networks Training. ICML 2019. Soufiane Hayou, Arnaud Doucet, Judith Rousseau. Link

♦ Mean-field Behaviour of Neural Tangent Kernel for Deep Neural Networks (2020, submitted). Soufiane Hayou, Arnaud Doucet, Judith Rousseau. Link

♦ On the Overestimation of the Largest Eigenvalue of a Covariance Matrix. (2017, Bloomberg). Soufiane Hayou

♦ Cleaning the Correlation Matrix with a Denoising AutoEncoder. (2017, Bloomberg). Soufiane Hayou

Experience

Contact

hayou( at )jhu.edu